Lucy Rana, Renu Bala

S.S Rana & Co.

Artificial intelligence (AI) has been steadily transforming industries across the world. The automobile industry, however, has arguably seen the most change in its processes and practices as a result of developments in AI. For some time now, AI has been applied across the automotive value chain: in design, manufacturing, production and post-production.

Every facet of the industry is benefiting from this transformative technology. Furthermore, the most debated aspect of AI, i.e., its use in ‘driver assistance’, is quickly gaining momentum in different parts of the world, with Tesla arguably being one of the modern pioneers in this area.

Level 1 and Level 2 autonomous vehicles, along with parking assistance and cruise control, are gaining popularity for their use of AI. If the current pace is maintained, AI technology would be present in a majority of automotive applications within the next 8-10 years, and by the end of 2030 it is expected that 95-98% of new vehicles would have AI to some extent[1]. Autonomous vehicles are those that use artificial intelligence in some or all areas of functioning.

Level 1 vehicles offer driver assistance, Level 2 vehicles offer partial automated driving, Level 3 vehicles incorporate conditional automated driving, while Level 4 and Level 5 cars have high and full automated driving respectively. In light of such developments, it is not impossible that in the coming years vehicles may be known by their software providers rather than by their hardware manufacturers. Many popular software-based companies such as Google, Amazon and Uber have stepped into the realm of autonomous vehicles. One can look forward to observing the solutions that AI could bring to traditional problems, which could even transform the functioning of the entire automotive sector.

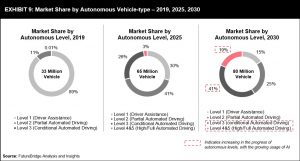

The following figure shows the estimated market share of autonomous vehicles in the year 2030. The pie chart also shows how these shares would be divided amongst the different autonomous levels. It is predicted that by the year 2030 there would be 80 million autonomous vehicles, with over 40% of them (that is, more than 32 million) falling under Level 3 (conditional automated driving).

Although an increase in the use of AI can be seen, implementation is not uniform across countries. Countries such as the US and the UK have applied AI at a larger scale than countries such as Italy and India. The following figure depicts the state of AI implementation at automotive organizations in different countries.

Source: Capgemini Research Institute, AI in Automotive Executive Survey, December 2018–January 2019.

LAW AND AI

With the increasing focus on software applications, the tools provided by intellectual property regimes allow inventors to legally exploit the benefits of their inventions. Patent law is the most commonly used mode of protection. Any invention that is new, involves an inventive step and is capable of industrial application is eligible for patent protection.

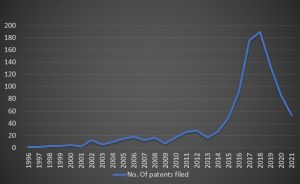

AI RELATED AUTOMOBILE PATENTS FILED IN INDIA

The development in this field is evident from the graph below, which shows steady growth in such patent applications in India. The noticeable dip in 2020-2021 can be attributed to the effects of COVID-19.

Source: InPASS- Indian Patent Advanced Search System

Some interesting examples of patents filed in India in this field are listed below:

- An intelligent driver assistance system for overtaking and blind spot detection (Publication No. IN202141019303; Date: 2021-04-27): An intelligent system that assists drivers with blind spot detection and with overtaking a vehicle in the same lane. The system helps the driver overtake by indicating the safe distance from the moving vehicle.

- Dialog processing server, control method for dialog processing server, and terminal (Publication No. IN201947026757): The invention enables dialog between AIs. The dialog processing server is configured to allow the artificial intelligence with which the first terminal communicates to receive dialog content generated by the artificial intelligence with which the second terminal communicates, and vice versa, if said dialog conditions are met.

Ethical Concerns

There are several ethical issues that arise when considering autonomous vehicles. The range of ethical concerns can be understood by imagining the number of moral decisions made by a driver on a regular basis. AI-based systems have the added advantage of data-driven decision making, but this does not guarantee a decision based on ethical values. An illustrative example is the famous trolley problem, wherein a choice must be made between harming several people and sacrificing one initially “safe” person to save them. In a perplexing situation such as this, the AI may be conflicted, which could lead to dangerous consequences.

Furthermore, there is a fear of increased unemployment due to the extensive use of AI. In reality, however, AI does not diminish employment opportunities overall; it reduces employment only in some job categories. As with any other technological wave, jobs are not eliminated outright; rather, there is a shift between categories along with the creation of entirely new sectors of employment.

In fact, AI would allow humans to concentrate on duties that require the application of the human mind. A suitable way to implement AI technology is to introduce it in a manner that helps humans perform their tasks rather than replacing them. Research shows that when companies incorporate AI that assists employees, the employees are more accepting of it and more comfortable with it.

With regard to the usage of such technology, it is the need of the hour that countries across the world build legislative frameworks that regulate and control the scope of AI. There should be transparency in the AI decision-making process. The simple guideline is that AI systems which function according to algorithms that cannot be explained should not be accepted. A transparent system not only helps users understand the process but also helps them identify possible bias.

The system should be able to provide answers to all possible queries regarding its functioning. Tackling bias in the functioning of AI is a multi-faceted concern, and the possibility of bias must be considered at various levels. Data bias can be prevented by ensuring that the data that feeds an AI is unbiased and that different sets of variables that have been introduced are sensitive.

Algorithmic fairness is introduced to bring forth equalized impact, which can be checked by examining whether all persons belonging to a specific group are treated similarly.
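One simple way to make such a check concrete is to compare how often each group receives a favourable outcome. The short Python sketch below is illustrative only: the group labels, the sample decisions and the function names are assumptions made for this example, and the outcome-rate comparison (often called demographic parity) is just one of several possible fairness measures, not one prescribed by any law discussed here.

```python
from collections import defaultdict


def favourable_rate_by_group(records):
    """Return the share of favourable outcomes for each group.

    `records` is an iterable of (group, outcome) pairs, where outcome is
    True for a favourable decision. Both names are hypothetical.
    """
    totals = defaultdict(int)
    favourable = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        if outcome:
            favourable[group] += 1
    return {group: favourable[group] / totals[group] for group in totals}


def parity_gap(records):
    """Largest difference in favourable-outcome rate between any two groups."""
    rates = favourable_rate_by_group(records)
    return max(rates.values()) - min(rates.values())


# Made-up decisions, e.g. whether the system reacted in time for a road user
decisions = [
    ("group_a", True), ("group_a", True), ("group_a", False), ("group_a", True),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", True),
]

rates = favourable_rate_by_group(decisions)
gap = parity_gap(decisions)
print(rates)
print(gap)
```

A gap close to zero suggests the groups are treated similarly on this measure; a large gap is a signal to investigate the data and the model further, not proof of unlawful bias on its own.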

Accidents involving self-driving cars

There are different approaches to defining liability in case of accidents involving self-driving vehicles. Most countries have not brought forth uniform laws to determine the liability of the parties involved; instead, insurance companies and other mediators balance the liability of the parties case by case. One basic approach is to hold the software manufacturer fully liable for the damage caused by an accident.

However, this proposition could discourage the advancement of the technology, especially for start-ups. Tesla has stated that it would accept complete liability where an accident is caused by faulty design, but that it would not be willing to accept full liability in any other case.

Another theory states that the driver must take full responsibility, as it is the driver’s duty to control the car irrespective of the use of driver assistance. Currently, the Geneva and Vienna Conventions do not allow any automotive vehicle to be used unless an operator is present in the vehicle to take control in an emergency.

It is presumed that final control of the car is in the hands of the driver, and hence it is the driver’s burden to steer the car away from any possible calamity. However, this position has also been criticized on the ground that accidents could happen due to malfunctioning software, and it would be unreasonable to expect the operator to manage a situation that is beyond the operator’s control.

Research conducted by different technological and legal institutions has reported that the optimal solution is to bring forth laws that deal with each possible situation differently, that is, laws that involve variables based on circumstances. The idea is to consider the possible combinations of circumstances in an accident and state the liability for each. The factors that could influence liability are the actual role performed by the AI, the data processed by the AI, the possible harms had the functions been performed through non-AI methods, and the regulatory issues regarding AI operations.

Accountability

Considering the possibility of AI systems being applied and used in a manner that could have detrimental effects, there needs to be an element of human accountability. In most cases, it would be impractical to hold a single person accountable for the deployment of a particular AI, since several entities are involved in the development and use of the technology. Ideally, accountability should be divided among stakeholders according to the degree of control each stakeholder has over the development, deployment, use and evolution of an AI system. Contract law, which will be applicable to AI developers, deployers and users, can be used to identify liabilities.

Currently, almost all applications of AI systems in legal systems require human mediation and attention. Most AIs are derivative of human work, and their functioning is determined by their developers and corporate owners. It is easier to consider accountability in such cases, as all actions of the AI essentially come from humans. To ensure that AI systems can be regulated in case they ‘go rogue’, it is recommended that developers build kill-switches to prevent unwanted consequences.
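To illustrate what a kill-switch means in software terms, the following is a minimal Python sketch, assuming a simple control loop that checks a human-operated stop flag before every step. The class and function names are hypothetical and chosen for this example; a production vehicle system would rely on certified, hardware-level safety mechanisms rather than anything this simple.

```python
import threading


class KillSwitch:
    """Hypothetical human-operated override for an automated control loop."""

    def __init__(self):
        self._stop = threading.Event()
        self.reason = None

    def trigger(self, reason):
        # Record why the system was stopped, which supports later accountability.
        self.reason = reason
        self._stop.set()

    def is_triggered(self):
        return self._stop.is_set()


def run_control_loop(kill_switch, max_steps, on_step):
    """Run up to `max_steps` iterations, checking the switch before each one."""
    completed = 0
    for step in range(max_steps):
        if kill_switch.is_triggered():
            break  # hand control back to the human operator
        on_step(step)
        completed += 1
    return completed


switch = KillSwitch()


def simulated_step(step):
    # Simulate an operator pressing the switch during the third step.
    if step == 2:
        switch.trigger("operator override")


completed = run_control_loop(switch, 10, simulated_step)
print(completed, switch.reason)
```

The point of the design is that the stop decision sits outside the automated logic and is recorded with a reason, so that a human remains both in control and identifiable.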

Developers’ accountability could be enforced through penalties if they fail to follow the necessary regulations and laws. However, the developer’s accountability and liability should not extend to situations where the developer has taken all reasonably foreseeable precautions while developing the AI system and where the systems were used in a manner not intended by the developer.

Data Protection

Automation of vehicles can be done only through data collection and processing. Artificial intelligence works on the understanding and exchange of data to provide maximum efficiency. Therefore, data protection law is of particular importance in the context of connected and autonomous mobility. One of the biggest concerns with regard to data protection is the question regarding ownership of data.

The users of autonomous vehicles would naturally have to provide a lot of information for the vehicle to function accurately. It must then be considered whether the software companies own this data. For example, the sensors used by Google’s self-driving cars include cameras, radars, thermal imaging, etc., and the information they capture is crucial, as it allows the AI to analyze and make predictions about the surrounding environment in order to function properly.

There are also several situations wherein manufacturers, insurers, and car sharing providers may be joint controllers when they jointly determine the means and purposes of processing certain personal data.

Autonomous vehicles generally use three types of data: i) owner and passenger information, wherein identifying details of the owner and vehicle are stored for authentication and customization purposes; ii) location data, which is used for navigation purposes; and iii) sensor data, which includes information collected by the various devices and sensors attached to the vehicle, such as radar, thermal imaging devices, and light detection and ranging (LiDAR), and which is used for decision making by the AI.

With regard to data protection, privacy by design is a concept that ensures that technology is developed in a data protection friendly manner from the outset. It is a legal requirement under the GDPR, which states that only the necessary personal data should be stored, and for the least amount of time.
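In practical terms, these two ideas (storing only what is necessary, and only for as long as necessary) can be sketched in a few lines of Python. Everything in the example below is an assumption made for illustration: the field names, the purposes and the retention periods are hypothetical and are not taken from the GDPR or from any manufacturer’s system.

```python
from datetime import datetime, timedelta

# Hypothetical allow-list of fields treated as necessary for each purpose
NECESSARY_FIELDS = {
    "navigation": {"latitude", "longitude", "timestamp"},
    "authentication": {"owner_id", "timestamp"},
}

# Hypothetical retention periods; real values depend on the legal basis
RETENTION = {
    "navigation": timedelta(days=30),
    "authentication": timedelta(days=365),
}


def minimise(record, purpose):
    """Keep only the fields that are necessary for the stated purpose."""
    allowed = NECESSARY_FIELDS[purpose]
    return {key: value for key, value in record.items() if key in allowed}


def purge_expired(records, purpose, now):
    """Drop records that are older than the retention period for the purpose."""
    cutoff = now - RETENTION[purpose]
    return [record for record in records if record["timestamp"] >= cutoff]


raw = {
    "latitude": 28.6,
    "longitude": 77.2,
    "timestamp": datetime(2024, 1, 1),
    "passenger_name": "A. Passenger",  # not needed for navigation
    "cabin_audio": b"...",             # not needed for navigation
}

stored = minimise(raw, "navigation")
remaining = purge_expired([stored], "navigation", now=datetime(2024, 3, 1))
print(sorted(stored))
print(remaining)
```

Here the passenger name and cabin audio are never stored for navigation, and the location record is discarded once it is older than the assumed 30-day period.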

However, there is a pressing need for specific laws in this context, given the importance of data protection and the threat of cyber-attacks. Incidents have already occurred due to cyber security attacks (for example, hackers remotely unlocked a BMW AV, and 2.2 million cars were recalled; attacks on Volkswagen cars led to the recall of about 100 million vehicles). Similarly, an attack on the Tesla electric car resulted in software updates for the car operating system. [2]

AI and Legal Personality

Legal personhood can be attributed to AIs according to the characteristics of different legal domains. For example, civil liability may be imposed on the AI in case of disputes, while the imposition of criminal liability remains restricted to natural persons. Such a classification would be reasonable, as criminal law emphasizes moral elements.

Moreover, applying civil law to AIs in the same way that it is applied to corporations would allow the legal system to better address the liability concerns regarding the use of AIs.

In 2017, Saudi Arabia became the first country to grant citizenship to a robotic AI, named ‘Sophia’. Estonia and Malta, as well as the European Union, have started drafting laws to consider the legal personality of AIs. However, there is a fear that “attributing electronic personhood to robots risks misplacing moral responsibility, causal accountability and legal liability regarding their mistakes and misuses”.

Google has expressed four main concerns with regard to granting legal personhood to an AI:

- It is unnecessary as the current legal frameworks already hold human persons and corporations accountable

- It would be impractical as machines do not have a consciousness to be admonished

- Moral responsibility is essentially a human characteristic

- Abusive persons may use AIs to shield themselves when facing legal liability.

Thus, there are many concerns which need to be answered from a legal, ethical and moral perspective, prior to making substantive legislation regarding the subject matter.

Legality of Autonomous Vehicles In India

The government of India has taken a stand against the introduction of driverless cars in India citing job loss as the primary reason. Further, the government believes that the infrastructure required for such technologies is still not available in the country. However, the government is in favor of all other possible uses of AI in the automobile sector.

According to the discussion paper on National Strategy for Artificial Intelligence, if AI is to be introduced to the country on a larger scale then there should be a framework which adheres to the unique needs of India. An important area for expansion is the exporting of autonomous vehicles along with research based modifications.

Free Market

The use of AI in the automotive industry does not benefit the market and the final producers alone; it also introduces a multitude of related technology-based markets. Similar to the conventional automotive market, where modifications and substitutes are available for different parts, the market for autonomous vehicles must also allow cross-application of software and hardware. The legal framework must prevent restrictive practices by companies that would reduce interoperability and customization.

A dynamic legislative structure that ensures the standardization of AI development and application for different purposes would allow consumers to benefit from healthy competition between the developing entities. The standardization process set by Standard Setting Organizations may require Standard Essential Patent (SEP) holders to comply with various technical requirements. By establishing rules and specifications that facilitate interconnectivity and interoperability of products and services, standards facilitate the efficient functioning of markets and encourage innovation.

Conclusion

The use of AI in the automotive sector would benefit all parties involved in the production, post-production and servicing of these vehicles. However, this powerful technology must be implemented only after due consideration of ethical and financial concerns. Countries like India need not only to improve the infrastructure for the application of AI but also to develop a legal framework that addresses liability concerns. A statutory structure that clearly defines stakeholder liability would smooth the adoption of AI in the automotive industry.

For more information, please write to us at: info@ssrana.com